VR-NEXT Engine: Expected Uses and Roadmap

A 3D model rendering engine that reproduces realistic physical characteristics such as materials and light.

●Price: TBD

●Release date: Summer 2019

This article introduces VR-NEXT, the application under development that we described in Up&Coming Vol.124, focusing on its expected uses.

Features

VR-NEXT has four main features.

Physically Based Rendering (PBR)

Physically Based Rendering (PBR) is a photorealistic rendering method for 3D models that reproduces realistic physical characteristics such as materials and light. (Refer to Up&Coming Vol.124 for details.)
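As a small illustration of what "physically based" means in practice, the sketch below evaluates Schlick's approximation of Fresnel reflectance, a term commonly used in PBR shading models. The source does not state which reflectance model VR-NEXT uses, so the function name `fresnel_schlick` and the base reflectance of 0.04 (a typical value for non-metallic surfaces) are assumptions chosen only for illustration.

```python
def fresnel_schlick(cos_theta: float, f0: float) -> float:
    """Schlick's approximation: F = F0 + (1 - F0) * (1 - cos(theta))^5.

    cos_theta: cosine of the angle between the view direction and the
               surface normal (hypothetical input, clamped to [0, 1]).
    f0:        reflectance at normal incidence (assumed material value).
    """
    cos_theta = min(max(cos_theta, 0.0), 1.0)
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


if __name__ == "__main__":
    # Reflectance rises as the viewing angle becomes more grazing.
    for cos_theta in (1.0, 0.5, 0.0):
        print(cos_theta, fresnel_schlick(cos_theta, 0.04))
```

The point of the example is that reflectance depends on the viewing angle through a physical relationship rather than an artist-chosen constant, which is what lets the same material look plausible under different lighting.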

Cross-platform

The application supports multiple combinations of hardware and operating systems.

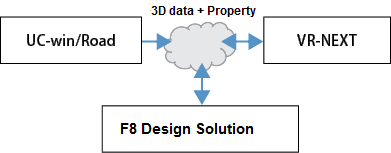

Cloud integration

It displays 3D data stored in the cloud and manages all of the data there.

Software platform

It will be developed in C++, and we will also provide an SDK that lets users add their own applications and modules.

Expected applications

CAD data visualization, VR simulation

This 3D application allows users to visualize information and to render simulation and analysis results in real time. Cloud integration also makes it possible to visualize big data. In addition, we are considering an SDK module that makes it easy to add cloud-linked functions using a scripting language.

Lighting simulation

Physically Based Rendering lets users calculate the brightness in an image as a real physical value by combining the light sources and calculating their reflections. The resulting physical quantities can be exported, and HDR images can be created. Not only sunlight but also advanced lighting such as street lights and lamps can be simulated.
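The idea of combining light sources into a physical brightness value can be sketched with two standard photometric relations: the inverse-square cosine law for illuminance from a point light, and the luminance of an ideal diffuse (Lambertian) surface. This is not VR-NEXT's implementation; the `PointLight` class, the function names, and the sample values are all hypothetical and serve only to show how physical units carry through the calculation.

```python
import math
from dataclasses import dataclass


@dataclass
class PointLight:
    intensity_cd: float   # luminous intensity toward the surface, in candela
    distance_m: float     # distance from the light to the surface point, in metres
    incidence_deg: float  # angle between the surface normal and the light direction


def illuminance_lux(lights):
    """Sum E = I * cos(theta) / d^2 over all lights; the result is in lux."""
    total = 0.0
    for light in lights:
        cos_theta = max(math.cos(math.radians(light.incidence_deg)), 0.0)
        total += light.intensity_cd * cos_theta / light.distance_m ** 2
    return total


def lambertian_luminance(illuminance, albedo):
    """Luminance of an ideal diffuse surface, L = albedo * E / pi, in cd/m^2."""
    return albedo * illuminance / math.pi


if __name__ == "__main__":
    # Assumed scene: a street light directly overhead and a lamp at 60 degrees.
    lights = [PointLight(1000.0, 5.0, 0.0), PointLight(500.0, 5.0, 60.0)]
    e = illuminance_lux(lights)
    print("illuminance [lx]:", e)
    print("luminance [cd/m^2]:", lambertian_luminance(e, albedo=0.2))
```

Because every quantity keeps its physical unit, values like these can be exported directly or stored without clamping in an HDR image, rather than being reduced to display-range pixel values.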

Wearable device + AR

Because the engine is cross-platform, it enables applications that use wearable devices and AR. Expected uses include facility management, on-site supervision, work support and work training, tourism, and education.

Example: AR display of wiring data

Embedded system

VR-NEXT can be built into the embedded systems of facilities, robots, or automobiles to present information more smoothly and to build user interfaces.

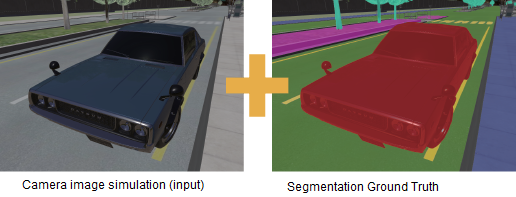

Camera sensor simulation and AI development

This product uses the lens distortion calculation and camera simulation developed for UC-win/Road. By generating high-performance, high-precision video, it can be used for camera sensor simulation and for creating large datasets for machine learning.
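To make the lens distortion step concrete, the sketch below applies a polynomial radial distortion model to normalized image coordinates. The source does not say which distortion model UC-win/Road or VR-NEXT uses, so the model choice, the function name `distort_radial`, and the coefficient values are assumptions for illustration.

```python
def distort_radial(x: float, y: float, k1: float, k2: float = 0.0):
    """Apply radial distortion to a normalized image point (x, y).

    The point is scaled by 1 + k1 * r^2 + k2 * r^4, where r is its distance
    from the optical centre. k1 and k2 are hypothetical lens coefficients.
    """
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


if __name__ == "__main__":
    # With a negative k1, points are pulled toward the image centre,
    # and the effect grows with distance from the centre.
    for point in [(0.0, 0.0), (0.25, 0.0), (0.5, 0.0)]:
        print(point, "->", distort_radial(*point, k1=-0.2))
```

Applying a mapping like this to every rendered pixel is one way a simulated camera image can be made to resemble the output of a real lens, which matters when the images are used as training data for a sensor that will see distorted input.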

Development roadmap

| Version | Content |
| --- | --- |
| Ver.1 (Summer 2019) | The main body is the rendering engine, which supports UC-win/Road VR data and standard 3D data formats. |
| Ver.2 | Release of the cloud integration function. |
| Ver.3 | Release of a new version of the rendering engine and real-time linkage with UC-win/Road. |
| Ver.4 | Release of an SDK for users. |