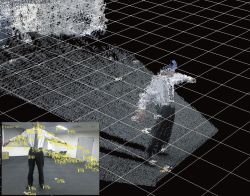

Each device uses technology from PrimeSense, Ltd. for its infrared depth sensor. An infrared laser is emitted from the lens on one side, and the reflected light is read by the lens on the other side (Figure 2). In Figure 1, the green lens is the laser emitter and the lens on the opposite side is the receiver for the sensor.

The middle lens on Xtion PRO LIVE and Kinect(TM) is an RGB camera. Xtion PRO has only the infrared depth sensor.

Xtion PRO LIVE and Kinect(TM) are multifunctional devices that provide additional functions (Chart 1).

| Function | Xtion PRO | Xtion PRO LIVE | Kinect(TM) |
|---|---|---|---|
| Infrared depth sensor | 640x480 (VGA)/30 fps, 320x240 (QVGA)/60 fps | 640x480 (VGA)/30 fps, 320x240 (QVGA)/60 fps | 640x480 (VGA)/30 fps, 320x240 (QVGA)/60 fps |
| RGB camera | X | 1280x1024 (SXGA) | 640x480 (VGA)/30 fps, 320x240 (QVGA)/60 fps |
| Audio microphones | X | 2 | 4 |
| Tilt motor | X | X | o |
| Interface | USB 2.0 | USB 2.0 | USB 2.0 |
| Power supply | USB bus power | USB bus power | AC adapter |

X: not provided; o: provided.

DTK supports the infrared depth sensor and RGB camera functions. The nominal effective range of the infrared depth sensor is 0.8 m to 3.5 m for Xtion PRO and Xtion PRO LIVE, and 1.2 m to 3.5 m for Kinect(TM).
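As an illustration of how the nominal ranges might be applied, the following minimal Python sketch discards depth readings that fall outside a device's nominal range. It is not part of DTK: the names `NOMINAL_RANGE_M`, `in_nominal_range`, and `filter_depths` are hypothetical, and readings are assumed to be already converted to meters.

```python
# Nominal effective ranges in meters, taken from the text above.
# The dictionary keys and all function names are hypothetical, not DTK identifiers.
NOMINAL_RANGE_M = {
    "Xtion PRO": (0.8, 3.5),
    "Xtion PRO LIVE": (0.8, 3.5),
    "Kinect": (1.2, 3.5),
}


def in_nominal_range(device, depth_m):
    """Return True if depth_m (meters) lies within the device's nominal range."""
    near, far = NOMINAL_RANGE_M[device]
    return near <= depth_m <= far


def filter_depths(device, depths_m):
    """Replace readings outside the nominal range with None."""
    return [d if in_nominal_range(device, d) else None for d in depths_m]


if __name__ == "__main__":
    # A reading at 1.0 m is usable on Xtion PRO but below Kinect's nominal minimum.
    print(in_nominal_range("Xtion PRO", 1.0))
    print(in_nominal_range("Kinect", 1.0))
    print(filter_depths("Kinect", [0.5, 1.2, 3.5, 4.0]))
```

Marking out-of-range readings as `None` rather than dropping them keeps the list aligned with the original pixel order, which may matter if the values come from a depth image.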